Introduction

Overview

Teaching: 20 min

Exercises: 0 min

Questions

What is RECAST and how can it help me make the most of my analysis?

How do docker and gitlab work together to preserve your analysis code and operating environment?

What is needed to fully automate and preserve the analysis workflow?

Objectives

Understand the motivation for incorporating RECAST into your analysis

Familiarize yourself with the three key components of RECAST

Introduction

This morning’s docker tutorial introduced you to containerization as an industry-standard computing tool that makes it quick and easy to bring up a customized computing environment to suit the needs of your application. In this tutorial, we’ll explore how ATLAS is leveraging the power of containerization for data analysis applications. You’ve already seen that standard ATLAS computing tools can be packaged into the atlas/analysisbase docker image, which lets you develop an analysis on your local machine. Now we’ll see how ATLAS is using docker and gitlab to preserve and re-interpret ATLAS analyses using a tool called RECAST (Request Efficiency Computation for Alternative Signal Theories).

Plausible and Likely Scenario

To understand why analysis preservation, and RECAST in particular, is useful, consider that it can take months or even years for a multi-person analysis team to develop cuts that optimally carve out a phase space sensitive to the model they want to test, and to estimate the Standard Model backgrounds and systematic uncertainties in this phase space.

Years later, other physicists may come up with new theories whose signals would show up in the same or a similar phase space. Because the cuts and Standard Model background estimates do not depend on which signal model is being tested, it would be much faster to re-run the original analysis on the new models, with a few tweaks, than to build a new analysis from scratch. But by then the original analysts have often moved on, and even if they can dig up the analysis code, they may not remember exactly how to run it or what environment it was run in.

This is where RECAST comes in! RECAST, initially developed during Run 1 of the LHC, is part of a broader effort called REANA, which aims to improve the reproducibility of particle physics data analysis. Both belong to the wider analysis preservation effort ongoing at CERN. RECAST, the tool you will be learning about here, lets analysts automate the process of passing a new signal model through their analysis while the analysis is still being developed. The idea is that the analysis can then be trivially reused at any time in the future to re-interpret new signal models in the phase space that it so painstakingly revealed.
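To make the re-interpretation idea concrete, here is a minimal Python sketch, assuming a toy event record with only two variables. The selection function, its cut values (150 GeV of missing transverse momentum, at least two b-jets), the cross-section, and the luminosity are all hypothetical placeholders, not the cuts of any real ATLAS analysis. The point is that the preserved selection stays fixed and only the signal sample changes: the frozen selection computes the new model's efficiency ε, which becomes an expected yield N = σ × L × ε.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """Toy reconstructed event (hypothetical fields, for illustration only)."""
    met: float           # missing transverse momentum in GeV
    n_bjets: int         # number of b-tagged jets
    weight: float = 1.0  # MC event weight


def passes_selection(event: Event) -> bool:
    """The preserved analysis cuts (hypothetical values), frozen once the analysis is done."""
    return event.met > 150.0 and event.n_bjets >= 2


def signal_efficiency(events: list[Event]) -> float:
    """Weighted fraction of signal events that pass the preserved selection."""
    total = sum(e.weight for e in events)
    passed = sum(e.weight for e in events if passes_selection(e))
    return passed / total if total > 0 else 0.0


def expected_signal_yield(xsec_fb: float, lumi_ifb: float, efficiency: float) -> float:
    """Expected number of selected signal events: sigma [fb] x L [fb^-1] x efficiency."""
    return xsec_fb * lumi_ifb * efficiency


if __name__ == "__main__":
    # A new signal model is just a new set of events; the cuts above do not change.
    new_model = [
        Event(met=200.0, n_bjets=2),
        Event(met=120.0, n_bjets=2),  # fails the MET cut
        Event(met=300.0, n_bjets=3),
        Event(met=180.0, n_bjets=1),  # fails the b-jet requirement
    ]
    eff = signal_efficiency(new_model)
    print(eff)                                      # 0.5
    print(expected_signal_yield(10.0, 139.0, eff))  # 695.0
```

Re-interpreting for another model means supplying a different list of events and cross-section; `passes_selection` and the original background estimate are reused untouched. A real analysis would feed such yields into a full statistical fit rather than stop here.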

RECAST in Action

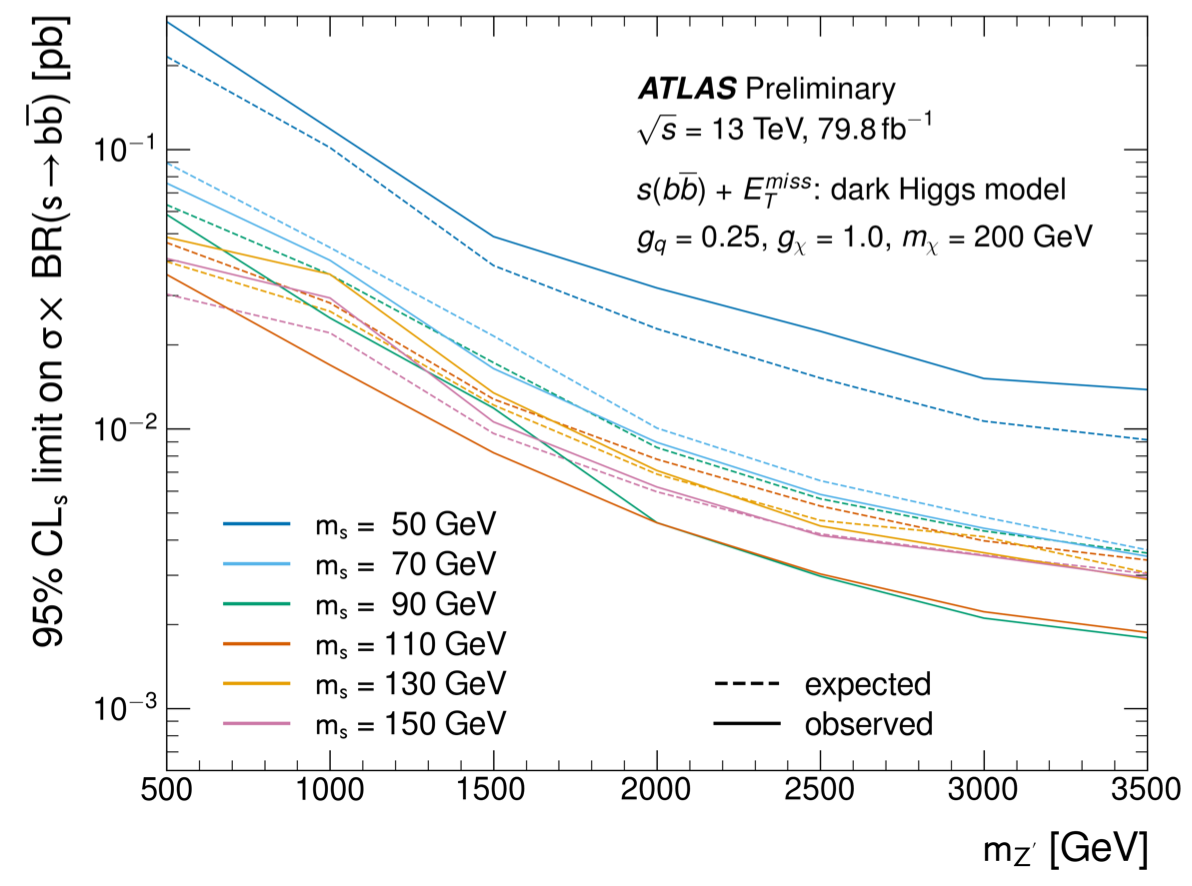

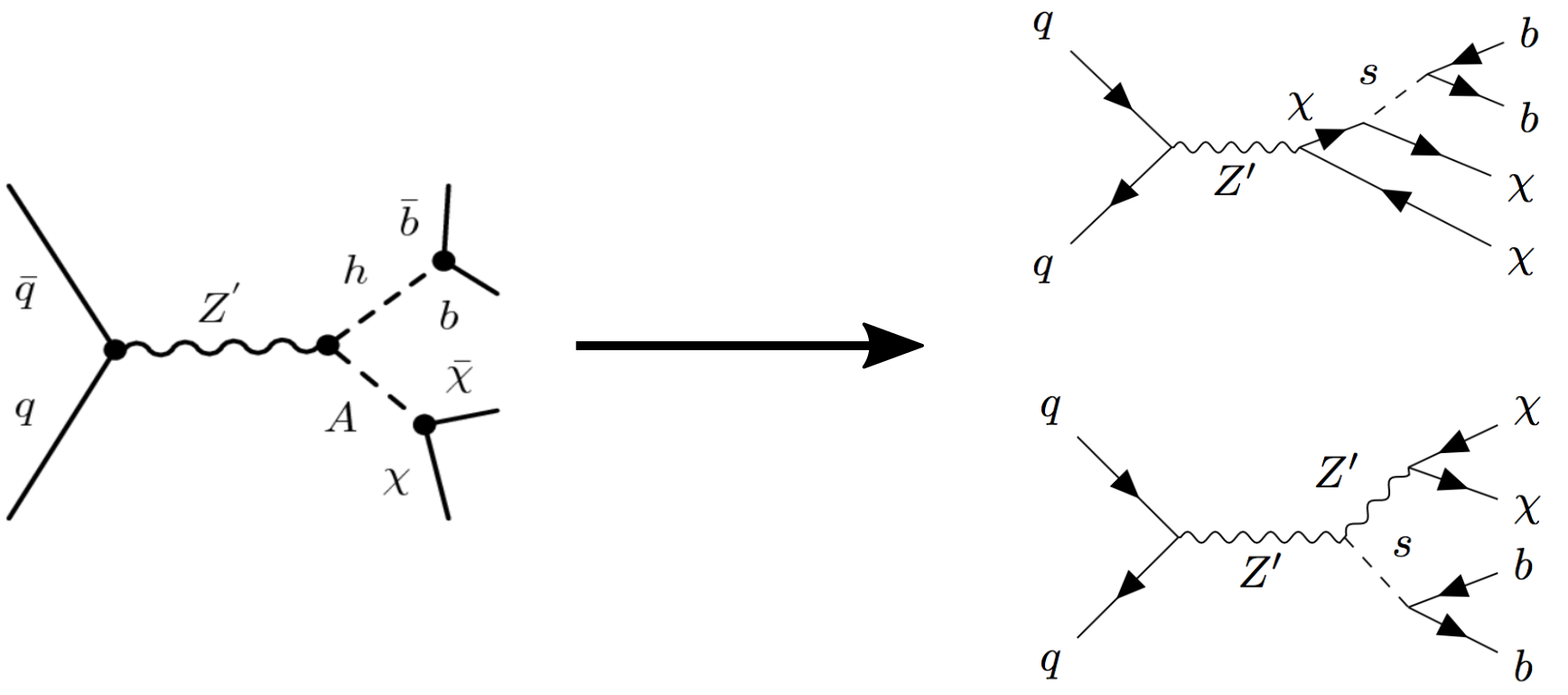

All this RECAST business may seem like a thing of the future in ATLAS, and up until recently it sort of was. But, as of last week, it is officially a thing of the now! The mono-Hbb dark matter search, which looks for dark matter produced in association with a Higgs boson decaying to a pair of b-quarks (not so different from our VHbb signal, actually…), was recently re-interpreted (link to paper) for a model in which the Standard Model Higgs boson is replaced by a dark-sector Higgs boson that decays to two b-quarks. This re-interpretation was done using the RECAST framework set up by the mono-Hbb analysis!

Three Key Components of RECAST

Analysis code preservation

Analysts write a custom code framework through which they pass data and signal/background MC to search for a particular physics model in the data. The first part of analysis preservation is therefore to preserve this code framework. GitLab is great for this: it preserves not only the final version of the code, but also every version and branch of the code that the analysts ever committed.
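Because GitLab keeps every committed version, the most reliable way to record "the code this analysis was run with" is a full commit hash rather than a branch name like `master`, which moves as people push. The Python sketch below, assuming the analysis lives in a local git checkout, reads the current commit with `git rev-parse HEAD` and builds a GitLab browse URL for that exact commit. The helper names and the project URL in the usage example are hypothetical.

```python
import re
import subprocess

# A full git SHA-1 commit hash: exactly 40 lowercase hexadecimal characters.
FULL_SHA = re.compile(r"[0-9a-f]{40}")


def current_commit(repo_dir: str = ".") -> str:
    """Return the commit hash currently checked out in repo_dir (requires git)."""
    result = subprocess.run(
        ["git", "rev-parse", "HEAD"],
        cwd=repo_dir, capture_output=True, text=True, check=True,
    )
    return result.stdout.strip()


def is_pinned_ref(ref: str) -> bool:
    """True for a full commit hash, which always names the same code.
    Branch names such as 'master' are rejected because they move over time."""
    return FULL_SHA.fullmatch(ref) is not None


def preserved_code_url(project_url: str, ref: str) -> str:
    """GitLab URL for browsing the repository exactly as it was at commit `ref`."""
    if not is_pinned_ref(ref):
        raise ValueError(f"{ref!r} is not a full commit hash; pin the exact commit")
    return f"{project_url.rstrip('/')}/-/tree/{ref}"


if __name__ == "__main__":
    # Hypothetical project URL; inside a real checkout you would pass current_commit().
    print(preserved_code_url("https://gitlab.cern.ch/example/my-analysis",
                             "0123456789abcdef0123456789abcdef01234567"))
```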

Environment preservation

But as we’ve seen, the code framework is far from standalone. It relies on having specific libraries, compilers, and even operating systems in place, and it may be really fussy about the exact versions of all these dependencies. So the second part of analysis preservation is to capture the exact environment in which the code was run by the original analysts. This is where docker comes in.

ATLAS has developed version-controlled docker base images that encapsulate the OS, compilers, standard libraries, and ATLAS-specific dependencies commonly used in ATLAS analyses. You've already had a chance to work with one of them, atlas/analysisbase. Individual analysis teams write custom Dockerfiles in their GitLab repositories that add the analysis code and any analysis-specific dependencies to the base image, and then build the code, producing the exact environment needed to run it.
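The same pinning idea applies to the environment. Below is a minimal Python sketch that writes out a Dockerfile of the kind described above: start `FROM` an explicitly versioned `atlas/analysisbase` tag (never `latest`, which changes over time), copy the analysis code in, and build it. The directory layout, the CMake build, the release tag, and the assumption that the base image provides `~/release_setup.sh` for setting up the release are illustrative assumptions; user and file-ownership details are omitted, so check everything against your own analysis's Dockerfile.

```python
def render_dockerfile(base_tag: str,
                      src_dir: str = "/code/src",
                      build_dir: str = "/code/build") -> str:
    """Return Dockerfile text that layers analysis code on a pinned analysisbase image.

    Assumptions (hypothetical layout): the analysis source is the Docker build
    context, it is built with CMake, and the base image ships ~/release_setup.sh.
    """
    if not base_tag or base_tag == "latest":
        # A floating tag would silently change the environment years from now.
        raise ValueError("pin an explicit analysisbase release tag, not 'latest'")
    lines = [
        f"FROM atlas/analysisbase:{base_tag}",
        f"COPY . {src_dir}",
        f"WORKDIR {build_dir}",
        # Set up the ATLAS release, then configure and compile the analysis code.
        f"RUN . ~/release_setup.sh && cmake {src_dir} && make",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # "21.2.85" is a placeholder tag; use the release your analysis was developed with.
    print(render_dockerfile("21.2.85"), end="")
```

Generating the file is not required, of course; most analyses simply commit a hand-written Dockerfile. The sketch is only meant to show which pieces that file pins down.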

Automated re-interpretation

The third and final piece of analysis preservation is to fully automate the steps that an analyst would go through in order to pass a new signal model through the analysis chain and arrive at the new result. This is accomplished using a tool called yadage, which lets the user codify each step of the analysis, the analysis environment (i.e. container) each step needs to run in, and how the steps fit together into the overall workflow that produces the final result.
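yadage itself describes workflows in YAML, so the Python below is not yadage's API. It is a hypothetical toy model of the three things a workflow must record: the steps, the container image each step runs in, and the dependencies between steps. Resolving the dependencies with a topological sort (Python's standard `graphlib`, 3.9+) gives a valid execution order, and because the signal file is a workflow parameter, any new model can be passed through the same chain. The image names and commands are made up for illustration.

```python
from dataclasses import dataclass
from graphlib import TopologicalSorter


@dataclass(frozen=True)
class Step:
    name: str
    image: str                        # container image the step must run in
    command: str                      # command template, filled in from workflow parameters
    depends_on: tuple[str, ...] = ()  # steps whose outputs this step consumes


def plan(steps: list[Step], params: dict[str, str]) -> list[tuple[str, str]]:
    """Return (image, command) pairs in an order that respects every dependency."""
    by_name = {s.name: s for s in steps}
    graph = {s.name: set(s.depends_on) for s in steps}
    # static_order() lists each step after all of its dependencies;
    # it raises graphlib.CycleError if the workflow contains a cycle.
    order = TopologicalSorter(graph).static_order()
    return [(by_name[n].image, by_name[n].command.format(**params)) for n in order]


# Hypothetical two-step workflow: event selection, then the statistical fit.
WORKFLOW = [
    Step("fit", "my-fitting:v1",
         "run_fit --signal selected.root --out limits.json",
         depends_on=("selection",)),
    Step("selection", "my-analysis:v1",
         "run_selection --input {signal_file} --out selected.root"),
]

if __name__ == "__main__":
    # Re-interpreting a new model only means changing this parameter.
    for image, command in plan(WORKFLOW, {"signal_file": "new_signal.root"}):
        print(f"[{image}] {command}")
```

Note that the steps are listed out of order on purpose: the dependency graph, not the listing order, decides that the selection runs before the fit.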

Other RECAST resources

In addition to this tutorial, there are many other resources available for RECAST, including:

RECAST documentation

- Excellent RECAST documentation written by Lukas Heinrich, from which this tutorial borrows extensively.

Slides from past tutorials

- Exotics Tutorial 2018-11-19

- Exotics Tutorial 2018-11-12

- SUSY Tutorial 2018-11-08

- Docker Tutorial Induction Week 2019-01-31

- GitLab Tutorial Induction Week 2019-01-31

Communication channels

- Mattermost Channel

- Mailing list: atlas-phys-exotics-recast@cern.ch

- Discourse

Talks

Key Points

RECAST preserves your analysis code and operating environment so that your analysis can be re-interpreted with new physics models.

GitLab enables full preservation of your analysis code.

Docker makes it possible to preserve the exact environment in which your analysis code is run.

Your analysis workflow definition automates the process of passing an arbitrary signal model through your containerized analysis chain.